I am a Deep Learning Researcher at KRAFTON AI, mentored by Kangwook Lee. I am also a Ph.D. candidate at the Korea Advanced Institute of Science and Technology (KAIST), advised by Jaegul Choo.

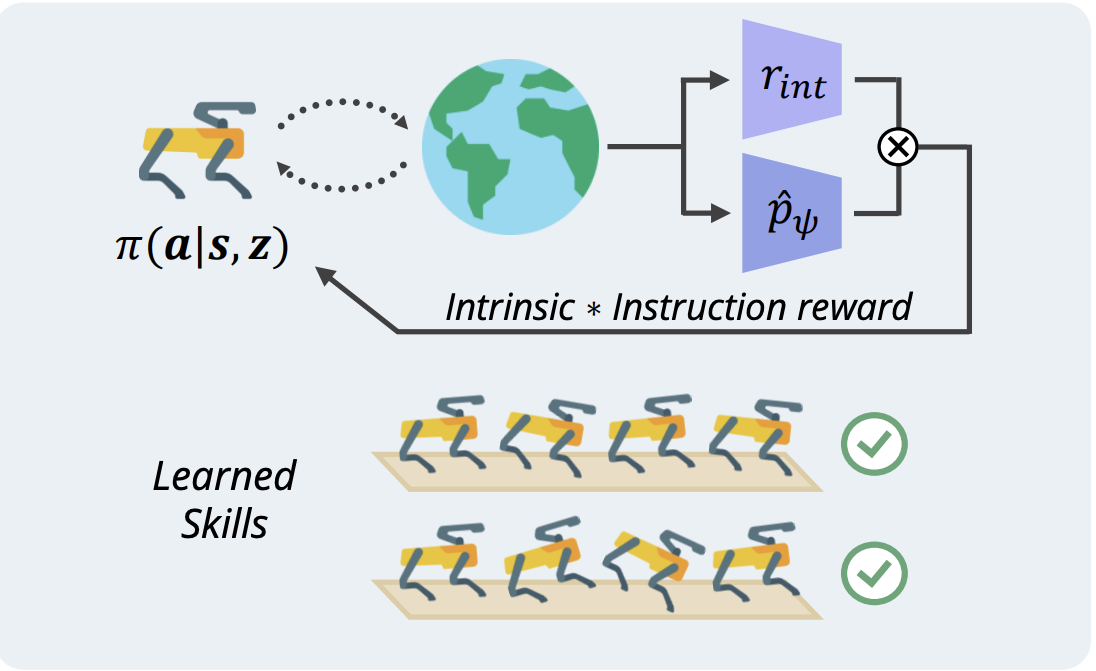

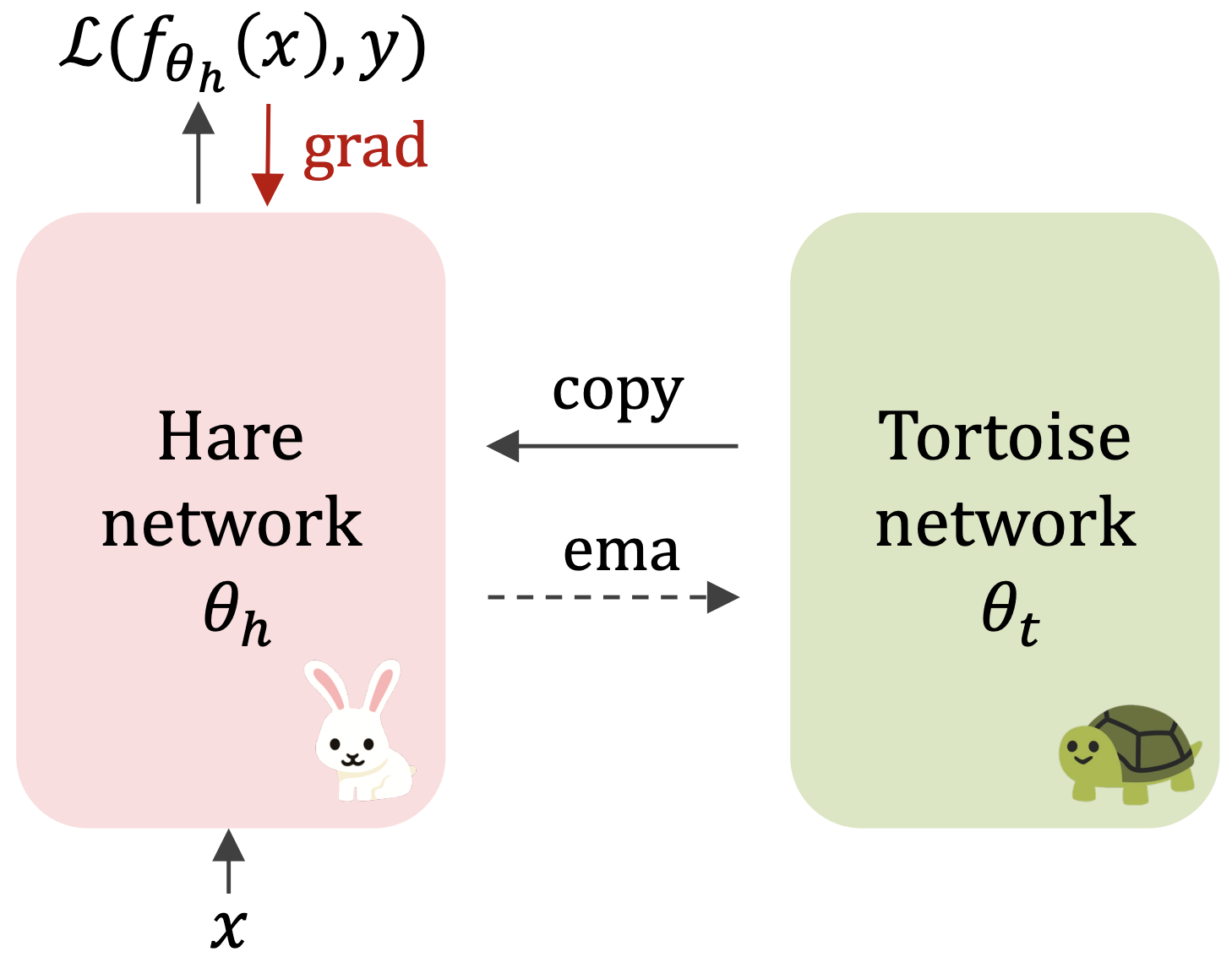

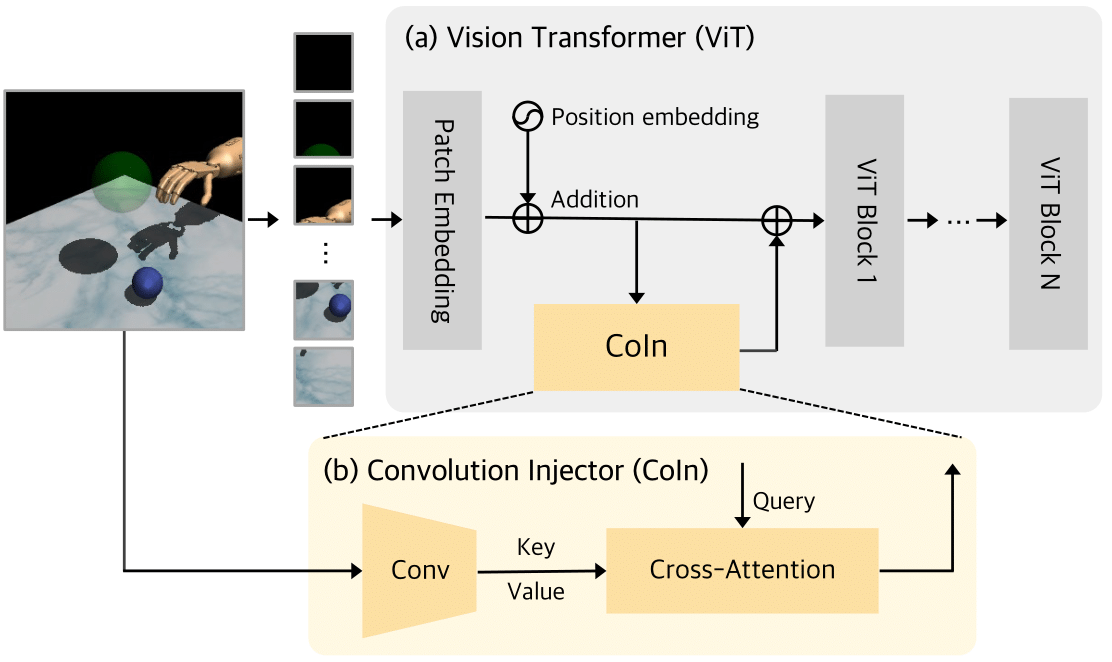

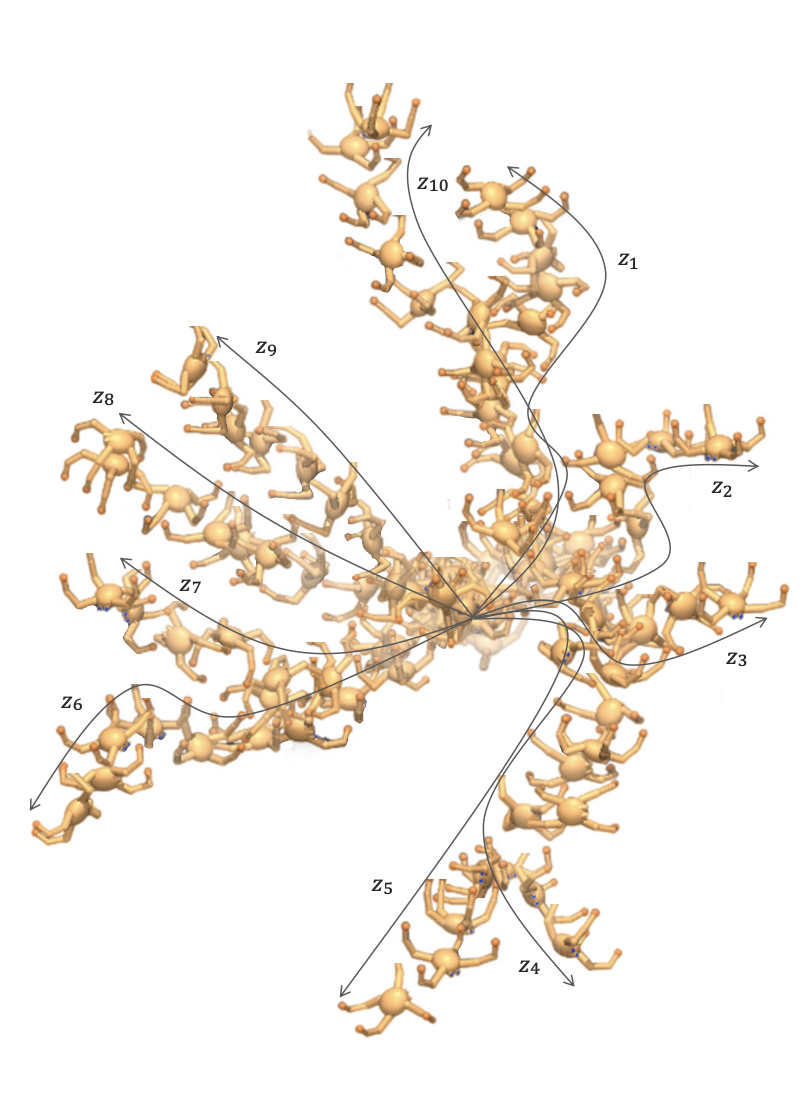

My ultimate goal is to build intelligent embodied agents capable of natural human interaction. I aim to create agents that understand their environment, make context-aware decisions, and continually improve through experience.

I pursue this vision in games by developing Co-playable Characters (CPCs), such as PUBG Ally. Specifically, I focus on designing LLM/SLM-based agents that serve as intelligent embodied companions in interactive environments.

Email / CV / Google Scholar / LinkedIn / GitHub